Understand AI Trust Signals – Why LLMs Trust Some Brands and Ignore Others (And How to Fix It)

If you’re trying to “rank” in AI search the way you ranked in Google ten years ago, this post will feel uncomfortable. That is a good thing.

Because AI search—whether it’s Google AI Overviews, ChatGPT, Perplexity, or Claude—doesn’t work on rankings first.

It works on trust first. And trust, in AI systems, is not a vibe. It’s a set of signals.

Let’s slow this down and unpack it properly.

TL;DR: The AI Search Reality Check

- AI doesn’t rank pages. It repeats ideas it trusts.

- You can rank #1 on Google and still be invisible to LLMs.

- Consistency beats cleverness every time in AI answers.

- If others don’t reference you, AI probably won’t either.

- Fresh content helps. Proven thinking helps more.

- Brands don’t earn trust. Patterns do.

- If your insight sounds fragile without your logo, AI won’t echo it.

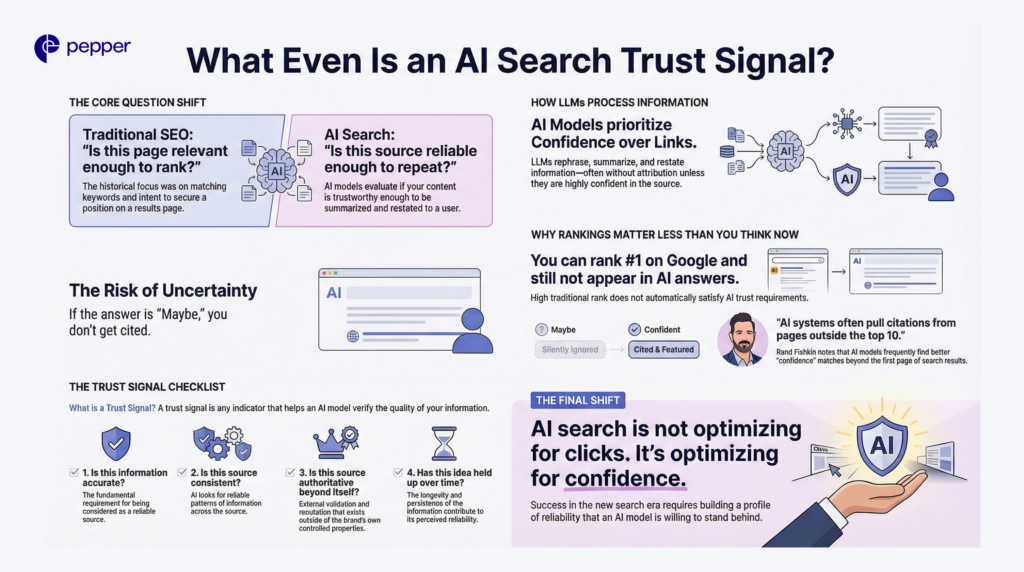

First: What Even Is an “AI Search Trust Signal”?

In traditional SEO, the question was: “Is this page relevant enough to rank?”

In AI search, the question is closer to: “Is this source reliable enough to repeat?”

LLMs don’t just link to pages. They rephrase, summarize, and restate information, and they often attribute a source only when they are confident in it.

So a trust signal is anything that helps an AI model answer:

- Is this information accurate?

- Is this source consistent?

- Is this source authoritative beyond itself?

- Has this idea held up over time?

If the answer is “maybe,” you don’t get cited. You just get silently ignored.

Why Rankings Matter Less Than You Think Now

One uncomfortable truth many SEO teams are discovering: You can rank #1 on Google and still not appear in AI answers.

Rand Fishkin has pointed this out repeatedly—AI systems often pull citations from pages outside the top 10, sometimes even outside page one.

Why? Because AI search is not optimizing for clicks. It’s optimizing for confidence.

The Core AI Search Trust Signals (Explained Like You’re Five)

Let’s break these down without jargon.

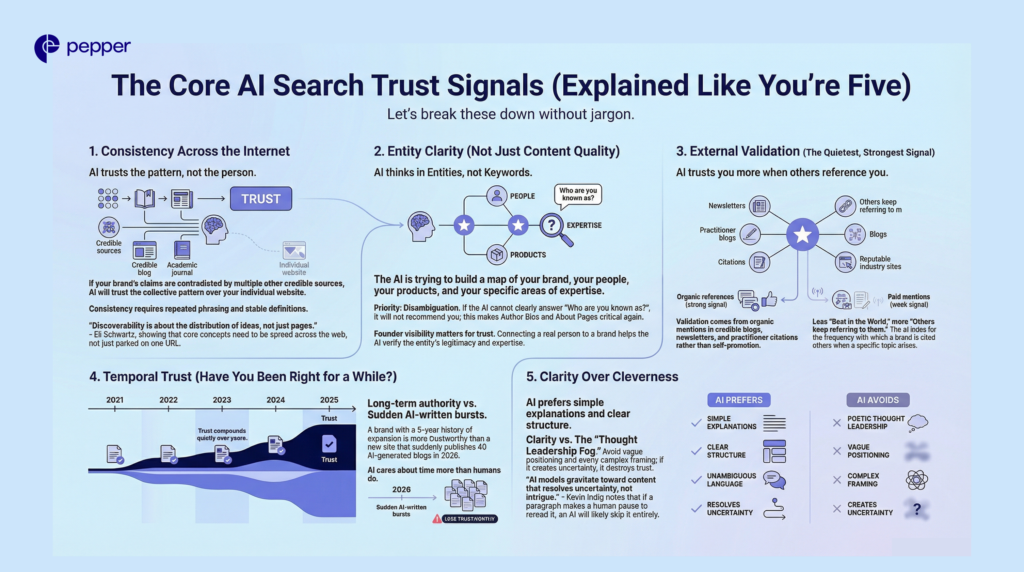

1. Consistency Across the Internet

If your brand says one thing but five other credible sources say something slightly different, AI trusts the pattern, not you.

AI systems love:

- Repeated phrasing

- Stable definitions

- Ideas that show up in multiple places over time

This is why brands that publish once and disappear don’t show up in LLM answers.

As Eli Schwartz often notes, discoverability today is about the distribution of ideas, not just pages.

2. Entity Clarity (Not Just Content Quality)

AI doesn’t think in keywords. It thinks in entities.

That means:

- Your brand

- Your people

- Your products

- Your expertise areas

If AI can’t clearly answer “who are you known as?”, it won’t trust you.

This is why:

- Author bios matter again

- About pages matter again

- Founder visibility matters again

Not for vanity but for disambiguation.

3. External Validation (The Quietest, Strongest Signal)

Here’s the part most brands miss. AI trusts you more when others reference you, not when you talk about yourself.

This includes:

- Mentions in credible blogs

- Quotes in newsletters

- Being cited by practitioners

- Appearing in roundups without paying for them

Think less “best X in the world.” Think more, “others keep referring to them when this topic comes up.”

4. Temporal Trust (Have You Been Right for a While?)

AI cares about time more than humans do.

A brand that:

- Published thoughtful content in 2021

- Updated it in 2023

- Expanded it in 2025

…is far more trustworthy than a brand that suddenly appeared in 2026 with 40 AI-written blogs. Trust compounds quietly.

5. Clarity Over Cleverness

This one stings for content marketers.

AI prefers:

- Simple explanations

- Clear structure

- Unambiguous language

Not:

- Thought leadership fog

- Vague positioning

- Overly poetic framing

Kevin Indig has mentioned that AI models gravitate toward content that resolves uncertainty, not content that creates intrigue. If a paragraph makes a human pause and reread, AI probably skips it.

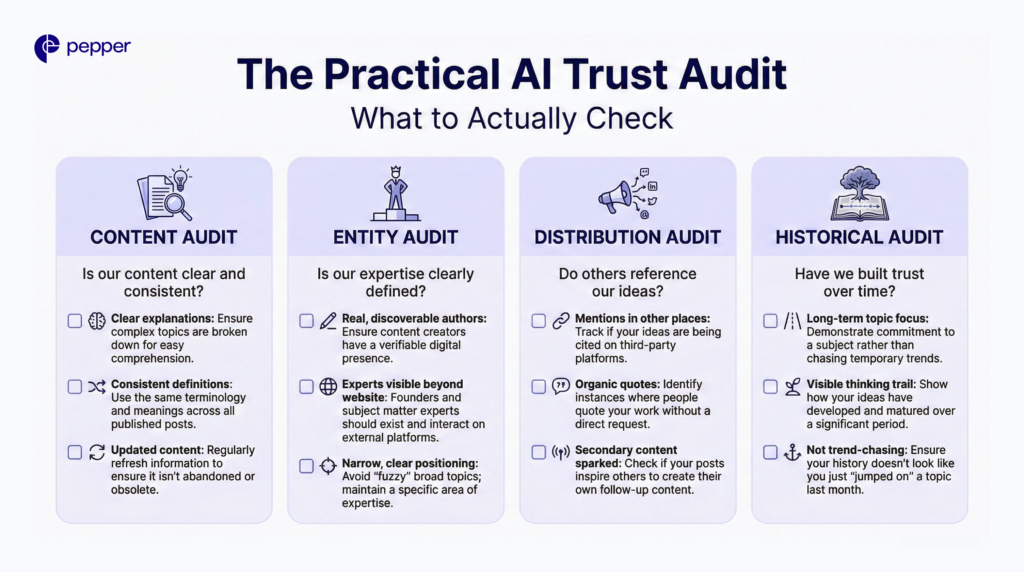

The Practical AI Trust Audit (What to Actually Check)

If you’re a marketer trying to appear in LLM citations, here’s what to audit—honestly.

🔍 Content Audit

- Do we explain concepts clearly, or just sound smart?

- Are definitions consistent across posts?

- Is the content updated or abandoned?
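
One rough way to check the second question is to pull the definition of a term from each post and compare them pairwise. Here is a minimal Python sketch, assuming you have already extracted the definitions by hand. The function name `definition_drift`, the post slugs, and the 0.6 threshold are all illustrative assumptions, and character-level similarity is only a crude proxy for whether two definitions actually agree.

```python
from difflib import SequenceMatcher
from itertools import combinations


def definition_drift(
    definitions: dict[str, str], threshold: float = 0.6
) -> list[tuple[str, str, float]]:
    """Return (post_a, post_b, similarity) for pairs below the threshold.

    `definitions` maps a post identifier to the sentence that post uses
    to define the term. The threshold is an arbitrary starting point.
    """
    flagged = []
    for (post_a, text_a), (post_b, text_b) in combinations(definitions.items(), 2):
        # ratio() returns a float in [0, 1]; 1.0 means identical strings.
        similarity = SequenceMatcher(None, text_a.lower(), text_b.lower()).ratio()
        if similarity < threshold:
            flagged.append((post_a, post_b, round(similarity, 2)))
    return flagged


# Hypothetical definitions of "trust signal" taken from three posts.
posts = {
    "2023-guide": "A trust signal is anything that helps an AI model judge a source.",
    "2024-update": "A trust signal is anything that helps an AI model judge a source as reliable.",
    "2025-webinar": "Trust signals are backlinks and domain ratings.",
}

for post_a, post_b, similarity in definition_drift(posts):
    print(f"Check {post_a} against {post_b}: similarity {similarity}")
```

Anything this flags is a prompt for a human read, not a verdict. Two definitions can be worded differently and still agree.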

🔍 Entity Audit

- Are authors real and discoverable?

- Do founders and subject experts exist outside the website?

- Is our expertise narrow and clear, or broad and fuzzy?

🔍 Distribution Audit

- Are our ideas referenced anywhere else?

- Do people quote us without us asking?

- Do our posts spark secondary content?

🔍 Historical Audit

- How long have we been talking about this topic?

- Is there a visible trail of thinking?

- Or does it look like we jumped on the trend last month?
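
If you want to compare the four areas side by side, one option is to record each answer as a plain yes or no and tally per area. The sketch below is a hypothetical scorecard in Python, not a Pepper tool or method. The `AuditArea` class, the shortened question labels, and the sample answers are all made up for illustration, and every question is weighted equally, which is itself an assumption.

```python
from dataclasses import dataclass, field


@dataclass
class AuditArea:
    name: str
    # Maps each checklist question to True (pass) or False (fail).
    answers: dict[str, bool] = field(default_factory=dict)

    def score(self) -> float:
        """Fraction of questions answered True; 0.0 for an empty area."""
        if not self.answers:
            return 0.0
        return sum(self.answers.values()) / len(self.answers)


def weakest_area(areas: list[AuditArea]) -> AuditArea:
    """Return the lowest-scoring area (the first one wins on ties)."""
    return min(areas, key=lambda area: area.score())


# Sample answers for an imaginary brand; replace with your own honest ones.
areas = [
    AuditArea("content", {
        "explains clearly": True,
        "definitions consistent": False,
        "content updated": True,
    }),
    AuditArea("entity", {
        "authors discoverable": True,
        "experts visible off-site": False,
        "expertise narrow and clear": True,
    }),
    AuditArea("distribution", {
        "ideas referenced elsewhere": False,
        "quoted without asking": False,
        "sparks secondary content": False,
    }),
    AuditArea("historical", {
        "long track record on topic": True,
        "visible trail of thinking": True,
        "not a recent trend jump": True,
    }),
]

for area in areas:
    print(f"{area.name}: {area.score():.0%}")
print("Start with:", weakest_area(areas).name)
```

The point of the tally is only to show where to start. A low score in one area says nothing about how an AI system actually weighs it.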

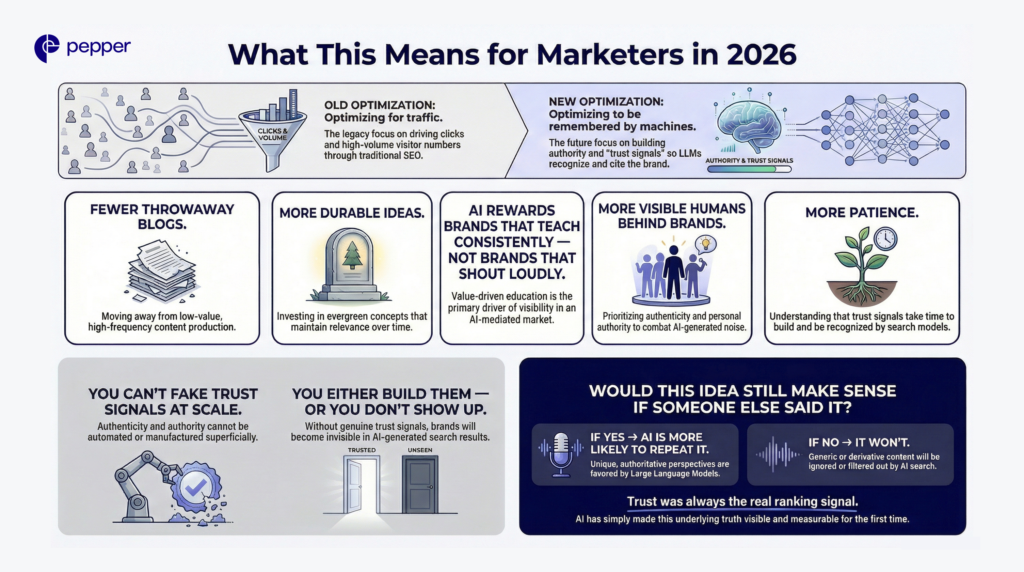

What This Means for Marketers in 2026

Here’s the quiet reframe: you’re no longer optimizing for traffic. You’re optimizing for being remembered by machines.

That means:

- Fewer throwaway blogs

- More durable ideas

- More visible humans behind brands

- More patience

AI search rewards brands that teach consistently, not brands that shout loudly.

And the uncomfortable truth? You can’t fake trust signals at scale. You either build them or you don’t show up.

A Final Thought

AI didn’t kill SEO. It exposed the parts that were fragile. Trust was always the real ranking signal. AI just made it visible and measurable.

If you want your brand to show up in LLM answers in 2026, the starting point isn’t keywords or clever prompts. It’s a much simpler test, and it’s the one Pepper uses when auditing AI search visibility:

Would this idea still make sense if someone else said it?

If the answer is yes, AI is far more likely to repeat it. If it’s no, it won’t. And it won’t explain why.

That’s the gap Pepper helps teams close—by turning trust signals into something you can actually audit, strengthen, and scale.

FAQs

Q. How does AI search decide which sources to trust?

AI models evaluate consistency, external validation, entity clarity, and historical accuracy across the web. Instead of ranking pages, they prioritize sources that feel reliable enough to repeat confidently.

Q. Do Google rankings still matter for AI search visibility?

They matter less than before. Many AI answers pull from sources outside the top 10 results because models optimize for confidence and consensus, not click-through potential.

Q. What are the most important AI search trust signals in 2026?

Consistency across sources, clear entity identity, third-party mentions, and content longevity matter most. Brands that demonstrate expertise over time are more likely to be cited by LLMs.

Q. How can marketers audit AI trust signals for their brand?

Marketers should assess content clarity, author credibility, external references, and historical depth. Tools like Pepper’s Atlas help make these signals visible and actionable across AI search surfaces.
